[텍스트마이닝] 조바이든 대통령 취임식 연설문 전처리 후 워드클라우드 그리기

조 바이든 대통령 취임식 연설문 워드클라우드

Python을 이용하여 조 바이든 대통령 취임식 연설문을 텍스트 분석해 보았습니다.

아래 파일은 제가 실습에 사용한 영어 원문 텍스트 자료입니다.

csv 파일의 경우 엑셀을 이용해 마침표 기준으로 텍스트를 나누었고, 그 외 전처리는 모두 파이썬을 활용했습니다.

1. 데이터 준비

- 패키지 불러오기

import pandas as pd

import numpy as np

import sklearn # 특징 추출

import re # 정규식

from nltk.tokenize import word_tokenize # 단어 토큰화

from nltk.corpus import stopwords # 불용어

from nltk.stem import PorterStemmer # 어간추출

from gensim import corpora # gensim - topic modeling 라이브러리, corpora - corpus 복수형

from sklearn.feature_extraction.text import CountVectorizer # 숫자화

from wordcloud import WordCloud, STOPWORDS # 워드클라우드, 불용어 처리

import matplotlib.pyplot as plt # 시각화

- 데이터 불러오기

# csv 파일

from google.colab import drive

drive.mount('/content/drive')

data = pd.read_csv('파일경로', encoding='cp949').address

# 텍스트 파일

from google.colab import drive

drive.mount('/content/drive')

f = open('파일경로',"r",encoding="UTF-8") # r 읽기모드

text = f.read()

f.close()

2. 전처리

csv 데이터를 불러와 전처리

- 불용어, 어간추출 사전 정의

stopWords = set(stopwords.words("english"))

stemmer = PorterStemmer()

- for 결과 담을 빈 words 리스트 생성

words = []

- 소문자화, 토큰화, 불용어 제거, 어간추출

for doc in data :

tokenizedWords = word_tokenize(doc.lower()) # 소문자 처리

print(tokenizedWords) # 소문자로 통일된 결과만

stoppedWords = [ w for w in tokenizedWords if w not in stopWords] # tokenizedWords에 있는 단어 계속, 만약 그 단어가 stopwords에 있는 단어가 아니면 stoppedwWords에 넣어라

stemmedWords = [stemmer.stem(w) for w in stoppedWords] # 위에서 처리된 단어를 어간추출

words.append(stemmedWords)data에 있는 문장들을 doc에 하나씩 넣어 살펴봄

소문자 처리 후 토큰화 하여 tokenizedwords에 넣음

tokizedwords에서 단어를 stopwords에 있는 단어가 아니면 stoppedwords에 넣어라(불용어 뺀 나머지)

불용어 처리된 것을 어간추출하여 stemmedwords에 넣음

마지막에 정제된 것은 words에 붙여 넣어라

- 문자열에서 알파벳만 남기기

alp_words = re.sub(r"[^a-zA-Z]", " ", str(words))

alp_words

re.sub(정규 표현식, 치환 문자, 대상 문자열)

^ 아닌 것과 매치, [a-zA-Z] : 알파벳 모두

+) 정규 표현식 참고

Regular Expression HOWTO

Author, A.M. Kuchling < amk@amk.ca>,. Abstract: This document is an introductory tutorial to using regular expressions in Python with the re module. It provides a gentler introduction than the corr...

docs.python.org

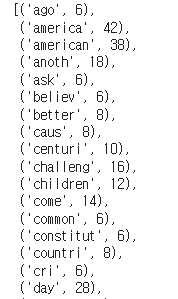

3. 빈도분석

- 토큰화

cv = CountVectorizer(max_features=100, stop_words='english').fit([alp_words])CountVectorizer : 문서 집합에서 단어 토큰을 생성하고 각 단어의 수를 세어 BOW 인코딩 벡터를 만듦

- max_features 최대 몇 개 가져올 것인지 (높은 순으로)

sklearn에 있는 stopwords, 위에서는 nltk의 stopwords ~ 안 겹치는 거 있으면 빠지도록

- DTM 형태로 변환

dtm = cv.fit_transform([alp_words])fit_transform : 데이터가 가지고 있는 정보는 유지하며 데이터를 변환

형태 변환한 것을 dtm으로 저장

- feature 단어 목록 확인

alp_words = cv.get_feature_names()

alp_wordsget_feature_names : DTM에 사용된 feature 단어 목록

- dtm 단어 합계

count_mat = dtm.sum(axis=0) # 세로 합계

count_matdtm의 자료를 합쳐서 세로로 count_mat에 넣음

- 단어, 빈도수를 합쳐서 리스트로 만듦

count = np.squeeze(np.asarray(count_mat)) # array형태로 차원을 줄여라

word_count = list(zip(alp_words, count)) # 단어와 count 를 list 형태로 쌍을 이루도록 묶음

word_countsqueeze : 차원을 축소 (matrix 행렬 -> array 배열 형태로 변환)

zip : 동일 개수로 이루어진 자료형 묶어주는 함수

-> zip으로 반환됨 -> list(zip(리스트1, 리스트2)) list 형태로 변환

- 내림차순 정렬

word_count = sorted(word_count, key=lambda x:x[1], reverse=True)워드클라우드 작성위해 큰 것 먼저 나오도록 정렬하여 새로운 리스트 반환

sorted(정렬할 데이터, key 정렬 기준, reverse 오름/내림)

- reverse=True 내림차순 / reverse=false,생략 : 오름차순

lamvda : 바로 정의하여 사용할 수 있는 함수-

-> (key 인자에 함수를 넘겨주면 해당 함수의 반환값을 비교하며 순서대로 정렬)

4. 워드클라우드

- 워드클라우드 설정

wc = WordCloud(background_color='black', width=800, height=600)배경색=블랙, 넓이=800, 높이=600

- 단어 빈도 사전

cloud = wc.generate_from_frequencies(dict(word_count)) # 빈도(사전형태())

- 워드클라우드 그리기

plt.figure(figsize=(12,9)) # plt 그림 그리기 (사이즈)

plt.imshow(cloud) # 클라우드 그리기

plt.axis('off') # 좌표값(축) 끄기

plt.show() # 보여줌

텍스트를 간단하게 전처리한 뒤 단어 빈도를 기준으로 워드클라우드를 그려보았습니다. 자주 언급되는 상위 100개의 단어들을 기준으로 하였으나 과연 이 단어들 자체를 특별하고 중요하다 볼 수 있는지는 의문입니다. 또한 어간 추출이 매끄럽지 못 하며 american, america와 같은 유사어의 경우에는 사전을 따로 정의하는 것이 좋을 것 같습니다.

따라서 여러 단어가 특정 문서 내에서 얼마나 중요한 것인지를 나타내는 TF-IDF를 이용해 보는 것도 좋을 것 같습니다. 단순히 '단어가 흔하게 등장하는 것'이 아닌 '문서 내에서 독특하게 사용된 단어'들을 파악하여 텍스트를 빠르게 파악할 수 있지 않을까 기대가 됩니다.

'Ability 🌱 > Python' 카테고리의 다른 글

| [텍스트마이닝] LDA와 Topic Modeling 개념 및 활용 (1) | 2023.01.16 |

|---|---|

| [텍스트마이닝] 감정점수를 계산하여 Sentiment Analysis 후 시각화 하기 (0) | 2023.01.06 |

| [Python] pandas 함수 (0) | 2022.04.21 |

| [Python] 자료 유형, 조건문(if), 반복문(for/while) (0) | 2022.04.20 |

| [Python] 파이썬 다운로드 & 간략 소개 (0) | 2022.04.05 |